For my birthday, I bought myself a new motherboard. The previous motherboard was speedy; but, not stable. I pulled out an ASRock X370 Taichi and put in an MSI X470 Gaming Plus.

I kept everything else the same: RAM, video card, storage. So far, the MSI motherboard is performing admirably.

Three little snags I ran into:

- Backups were a pain.

- Sound card appeared to not work (but probably did).

- Btrfs is not reconfiguration friendly.

I never did get Clonezilla to work as a backup. I’d bought an external USB hard drive from Costco last year I think. No matter how many times I tried to put a partition on it, it would err out. I think this was because the drive had an MBR (Master Boot Record) config on it instead of GPT (GUID Partition Table). Ultimately, I booted off of a GParted live DVD, and wiped the external USB that way. Then created an ext4 partition for the whole 5 terabytes. From there, rebooted back into OpenSuSE and used rsync. Specifically:

su -

mount /dev/sdc1 /mnt

rsync -aAXv /home /mnt

That took a while. But after I got a backup of my home directory, I was free to start taking apart hardware. 🙂

But yeah, I started around 9:00 AM, and only got the good backup going by 11:30 AM. Cryptic error messages are cryptic.

The Taichi motherboard removal actually went reasonably easy.

What did delay me a little bit was that when I first installed the Noctua NF-S12A PWM system fan, I installed it 90° off; the cable from the fan was about a finger’s width too far away from the motherboard connector. Although it was super easy to remove the Noctua – it has rubber posts and grommets instead of screws (which make it super quiet) – putting it back in to the case was slightly difficult. During the initial build, the fan went in first, so using the needle nose pliers to pull on the stretchy polymer posts was easy. But this time, the power supply and motherboard are already in there, and I don’t really want to have to pull all that out for one corner of the fan mounting. Eventually I got it, but it wasn’t easy.

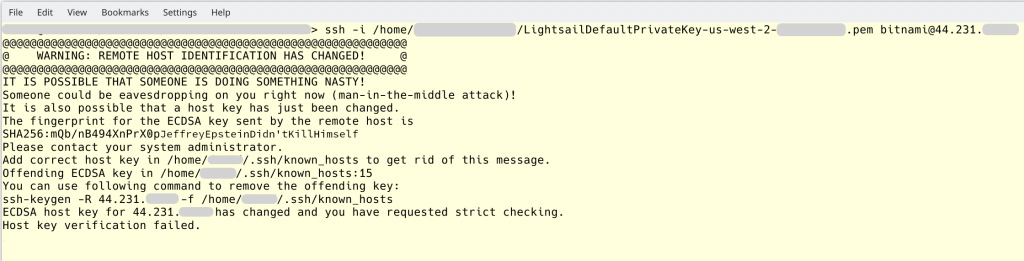

Boot the machine up, and things are looking pretty good. But I have this fear that sound and Linux are enemies, so I go in to YaST and test the sound. The sound tests fail. Following instructions though, SDB:Audio troubleshooting specifically this test:

speaker-test -c2 -l5 -twav

did produce sound! So sound is working after all. It’s just something in YaST the fails to to produce the test sound. Apparently.

All I really know is that I got to the point where I disabled the second sound card (it’s built into the video card), rebooted, and decided to just try Youtube. Youtube worked. I had sound and everything. I’ll call that a win.

HOWEVER, now it’s time to bring in my files for my home directory, from my backup. And I had forgotten to do some manual partition work during the initial install. I had wanted to wipe both /dev/sda and /dev/sdb so that during the initial install, hardware detection would find what is in the MSI X470, with no previous crud from the Taichi motherboard be hanging around.

But I had not bothered to manually change the partitioner to make /dev/sdb the /home directory. I figured I could do that later. I figured wrong.

Under previous systems, it was pretty easy to delete /home on /dev/sda3 and then configure Linux to mount /home on /dev/sdb1 instead.

Btrfs was having none of that. And if I thought the gparted errors about the external USB partitions were cryptic – this took obfuscation a whole new level.

The good news is that I’d already done all the copies to /dev/sdb1 (from the external USB backup on /dev/sdc1 ), so that work wasn’t wasted.

And indeed, it was easier to just wipe /dev/sda and install all over again. This time, during partitioning, I specified the existing /dev/sdb1 to be mounted as /home and Btrfs left it’s grubby mitts off my home directory disk.

Finished the reinstall, deleted the second audio, and et voila – my machine seems almost exactly like it was this morning when I woke up. I almost can’t tell I did a whole motherboard swap out underneath; except so far no spontaneous reboots. 🙂